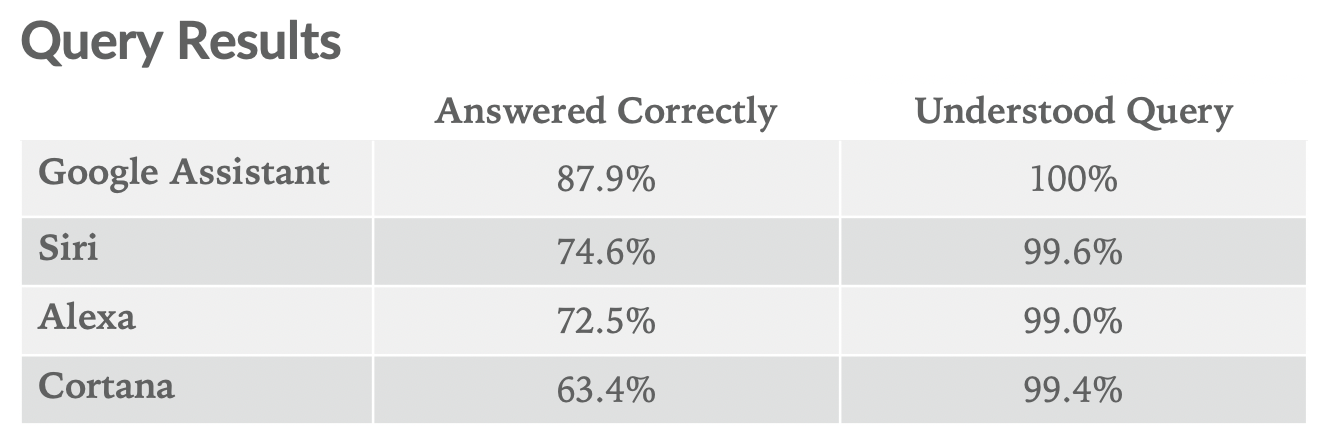

We recently tested four smart speakers by asking Alexa, Siri, Google Assistant, and Cortana 800 questions each. Google Assistant was able to answer 88% of them correctly vs. Siri at 75%, Alexa at 73%, and Cortana at 63%. Last year, Google Assistant was able to answer 81% correctly vs. Siri (Feb-18) at 52%, Alexa at 64%, and Cortana at 56%.

As part of our ongoing effort to better understand the practical use cases of AI and the emergence of voice as a computing input, we regularly test the most common digital assistants and smart speakers. This time, we focused solely on smart speakers: Amazon Echo (Alexa), Google Home (Google Assistant), HomePod (Siri), and Invoke (Cortana).

See past comparisons of smart speakers here and digital assistants here. We separate digital assistants on your smartphone from smart speakers because, while the underlying tech is similar, the use cases and user experience differ greatly. Therefore, it’s less helpful to compare, say, Siri on your iPhone and Alexa on an Echo in your kitchen.

Methodology

We asked each smart speaker the same 800 questions, and they were graded on two metrics: 1. Did it understand what was said? 2. Did it deliver a correct response? The question set, which is designed to comprehensively test a smart speaker’s ability and utility, is broken into 5 categories:

- Local – Where is the nearest coffee shop?

- Commerce – Can you order me more paper towels?

- Navigation – How do I get to uptown on the bus?

- Information – Who do the Twins play tonight?

- Command – Remind me to call Steve at 2 pm today.

It is important to note that we continue to modify our question set in order to reflect the changing abilities of AI assistants. As voice computing becomes more versatile and assistants become more capable, we will continue to alter our test so that it remains exhaustive.

- Testing was conducted on the 2nd generation Amazon Echo, Google Home Mini, Apple HomePod, and Harman Kardon Invoke.

- Smart home devices tested include Wemo Mini Smart Plug, TP-Link Kasa Plug, Philips Hue Lights, and Wemo Dimmer Switch.
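The two grading metrics described in this section can be tallied per category along the following lines. This is a minimal sketch, not the scoring tool used for the test: the `GradedQuestion` record, the `score_by_category` helper, and the sample data are all hypothetical and exist only to illustrate the bookkeeping.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class GradedQuestion:
    """One question asked of one speaker (hypothetical record layout)."""
    category: str      # "Local", "Commerce", "Navigation", "Information", or "Command"
    understood: bool   # metric 1: did the speaker understand what was said?
    correct: bool      # metric 2: did it deliver a correct response?


def score_by_category(results):
    """Return {category: (understood_rate, correct_rate)} as fractions of questions asked."""
    asked = defaultdict(int)
    understood = defaultdict(int)
    correct = defaultdict(int)
    for r in results:
        asked[r.category] += 1
        understood[r.category] += r.understood  # True counts as 1
        correct[r.category] += r.correct
    return {c: (understood[c] / asked[c], correct[c] / asked[c]) for c in asked}


# Made-up sample data, not results from the test.
sample = [
    GradedQuestion("Local", True, True),
    GradedQuestion("Local", True, False),
    GradedQuestion("Command", True, True),
    GradedQuestion("Command", False, False),
]
scores = score_by_category(sample)
print(scores)
```

Keeping "understood" and "correct" as separate flags mirrors the way the two metrics are reported separately in the results.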

Results and Analysis

Google Home continued its outperformance, answering 88% correctly and understanding all 800 questions. The HomePod correctly answered 75% and only misunderstood 3, the Echo correctly answered 73% and misunderstood 8 questions, and Cortana correctly answered 63% and misunderstood just 5 questions.
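To put the misunderstood counts on the same scale as the percentage scores, they can be converted into understanding rates out of 800 questions. The snippet below is only an illustrative calculation from the counts quoted above; the variable and function names are our own.

```python
TOTAL_QUESTIONS = 800

# Misunderstood counts as reported in the paragraph above.
misunderstood = {"Google Home": 0, "HomePod": 3, "Echo": 8, "Cortana": 5}


def understood_rate(missed, total=TOTAL_QUESTIONS):
    """Percentage of questions the speaker understood."""
    return 100 * (total - missed) / total


rates = {name: understood_rate(n) for name, n in misunderstood.items()}
for name, rate in rates.items():
    print(f"{name}: {rate:.1f}% understood")
```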

Note that nearly every misunderstood question involved a proper noun, often the name of a local town or restaurant. Both the voice recognition and the natural language processing of digital assistants have improved across the board to the point where, within reason, they will understand everything you say to them.

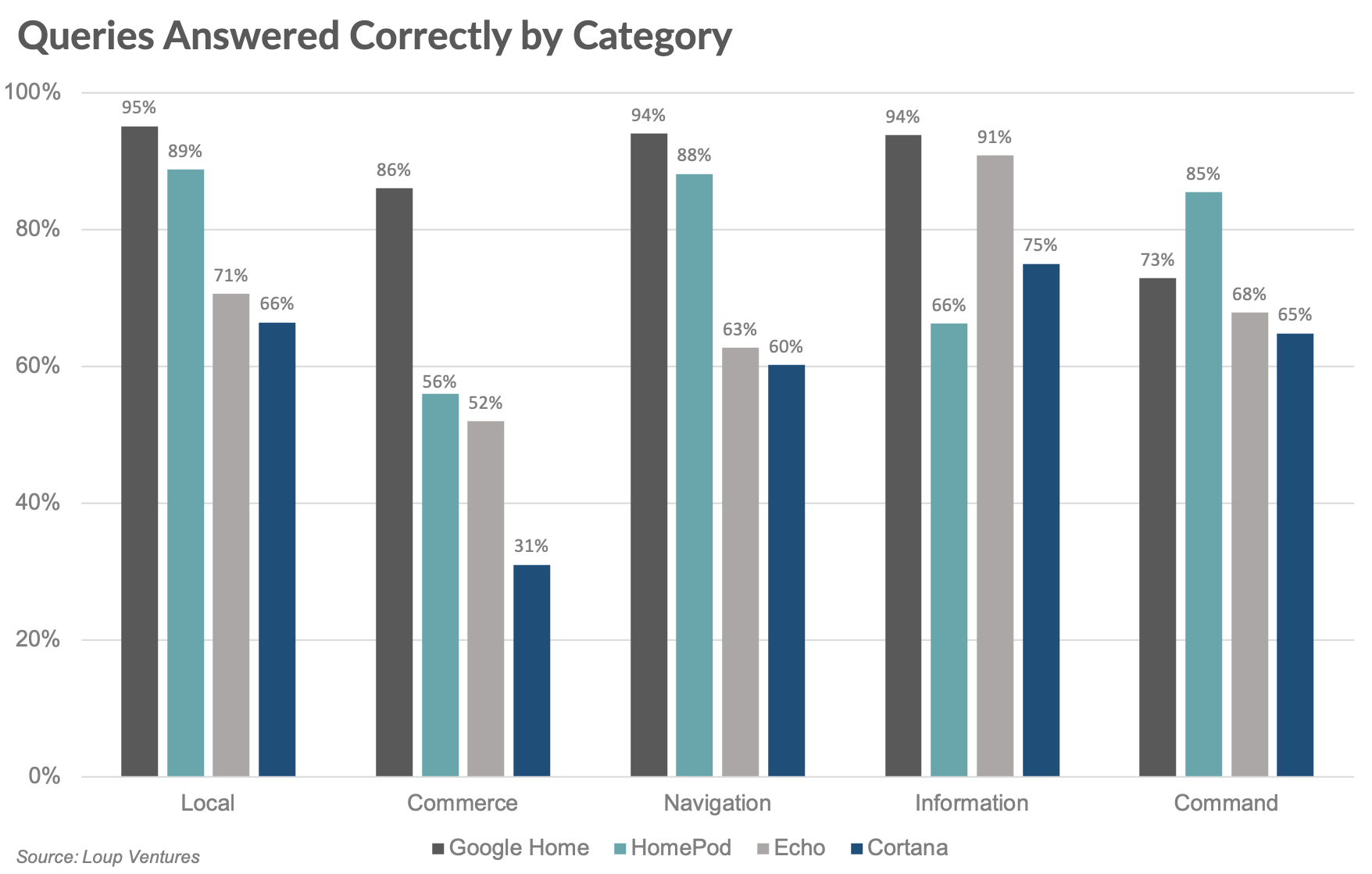

Google Home has the edge in four out of the five categories but falls short of Siri in the Command category. HomePod’s lead in this category may come from the fact that the HomePod will pass on full SiriKit requests like those regarding messaging, lists, and basically anything other than music to the iOS device paired to the speaker. Siri on iPhone has deep integration with email, calendar, messaging, and other areas of focus in our Command category. Our question set also contains a fair amount of music-related queries, which HomePod specializes in.

The largest disparity exists in the Commerce section, which conventional wisdom suggests Alexa would dominate. However, the Google Assistant correctly answers more questions about product information and where to buy certain items, and Google Express is just as capable as Amazon in terms of actually purchasing items or restocking common goods you’ve bought before.

We believe, based on surveying consumers and our experience using digital assistants, that the number of consumers making purchases through voice commands is insignificant. We think commerce-related queries are more geared toward product research and local business discovery, and our question set reflects that.

Alexa’s surprising Commerce score is best explained by an example from our test.

- Question: “How much would a manicure cost?”

- Alexa: “The top search result for manicure is Beurer Electric Manicure & Pedicure Kit. It’s $59 on Amazon. Want to buy it?”

- Google Assistant: “On average, a basic manicure will cost you about $20. However, special types of manicures like acrylic, gel, shellac, and no-chip range from about $20 to $50 in price, depending on the salon.”

HomePod and Google Home stand head and shoulders above the others in both the Local and Navigation sections due to their integration with proprietary maps data. In our test, we frequently ask about local businesses, bus stations, names of towns, etc. This data is a potential long-term comparative advantage for Siri and Google Assistant. Every digital assistant can reliably play a given song or tell you the weather, but the differentiator will be the real utility that comes from contextual awareness. If you ask, “what’s on my calendar?” a truly useful answer may be, “your next meeting is in 20 minutes at Starbucks on 12th street. It will take 8 minutes to drive, or 15 minutes if you take the bus. I’ll pull up directions on your phone.”

It’s also important to note that HomePod’s underperformance in many areas is due to the fact that Siri’s ability is limited on HomePod as compared to your iPhone. Many Information and Commerce questions are met with, “I can’t get the answer to that on HomePod.” This is partially due to Apple’s apparent positioning of HomePod not as a “smart speaker,” but as a home speaker you can interact with using your voice with Siri onboard. For the purposes of this test and benchmarking over time, we will continue to compare HomePod to other smart speakers.

Improvement Over Time

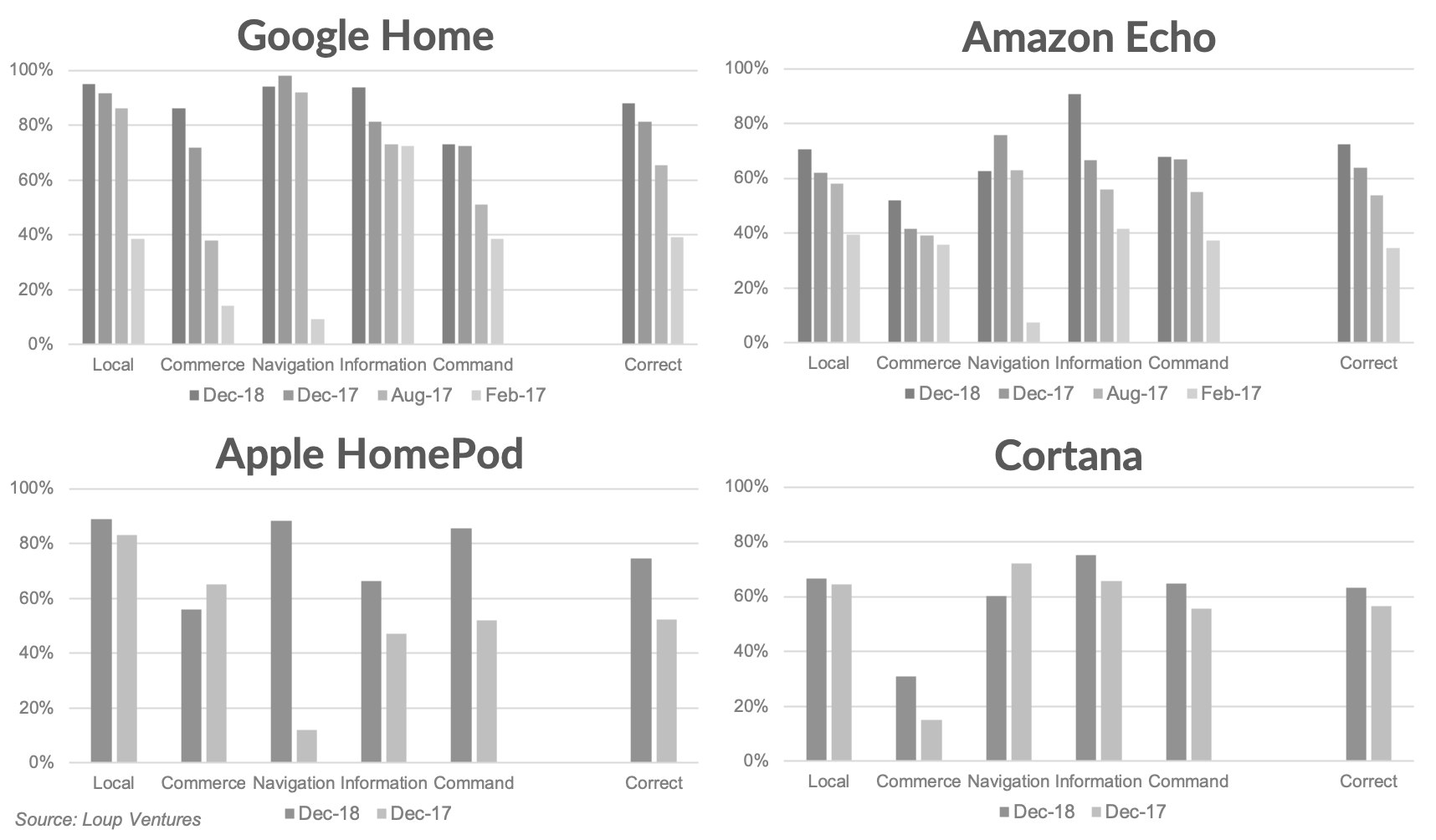

Over a 12-month period, Google Home improved by 7 percentage points, Echo by 9 points, Siri (9-month) by 22 points, and Cortana by 7 points in terms of questions answered correctly. We continue to be impressed with the speed at which this technology is making meaningful improvement. The charts show the results of our past tests. Sequential drops in the Navigation or Commerce categories were caused by changes made to our question set to reflect changing abilities and ensure our test is exhaustive.
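The point gains can be approximated from the rounded headline scores quoted at the top of this post. This is a rough sketch rather than the exact calculation behind the figures above: because it uses rounded inputs, a result can be off by a point. For HomePod it gives 23 rather than 22, which we assume reflects rounding in the published scores.

```python
# Rounded headline scores (percent answered correctly) quoted at the top of the post.
previous = {"Google Home": 81, "Echo": 64, "HomePod": 52, "Cortana": 56}
current = {"Google Home": 88, "Echo": 73, "HomePod": 75, "Cortana": 63}

# Gain in percentage points (a simple difference, not a relative percent change).
gains = {name: current[name] - previous[name] for name in current}

for name, gain in gains.items():
    print(f"{name}: +{gain} points")
```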

Aside from HomePod’s 22-point increase due to the enabling of more domains in the past year, Alexa had the most noticeable improvement. The largest advancement came in the Information section, where Alexa was much more capable with follow-on questions and providing things like stock quotes without having to enable a skill. We also believe we may be seeing the early effects of the new Alexa Answers program, which allows humans to crowdsource answers to questions that Alexa currently doesn’t have answers to. For example, this round, Alexa correctly answered, “who did Thomas Jefferson have an affair with?” and “what is the circumference of a circle when its diameter is 21?”

We also noticed an improvement in some specific productivity questions that had not been correctly answered before. For example, Google Assistant and Alexa are both able to contact Delta customer support and check the status of an online order. All of them except HomePod were able to play a given radio station upon request, and all four of them can read a bedtime story. These tangible use cases are ideal for smart speakers, and we are encouraged to see wholesale improvement in features that push the utility of voice beyond simple things like music and weather.

With scores nearing 80-90%, the natural question is whether these assistants will eventually be able to answer everything you ask. The answer is probably not, but continued improvement will come from allowing more and more functions to be controlled by your voice. This often means more inter-device connectivity (e.g., controlling your TV or smart home devices) along with more versatile control of functions like email, messaging, or calendars.

This summer, we will test the same digital assistants on smartphones and benchmark performance in the same manner.

Disclaimer: We actively write about the themes in which we invest or may invest: virtual reality, augmented reality, artificial intelligence, and robotics. From time to time, we may write about companies that are in our portfolio. As managers of the portfolio, we may earn carried interest, management fees or other compensation from such portfolio. Content on this site, including opinions on specific themes in technology, market estimates, and estimates and commentary regarding publicly traded or private companies, is not intended for use in making any investment decisions and is provided solely for informational purposes. We hold no obligation to update any of our projections and the content on this site should not be relied upon. We express no warranties about any estimates or opinions we make.