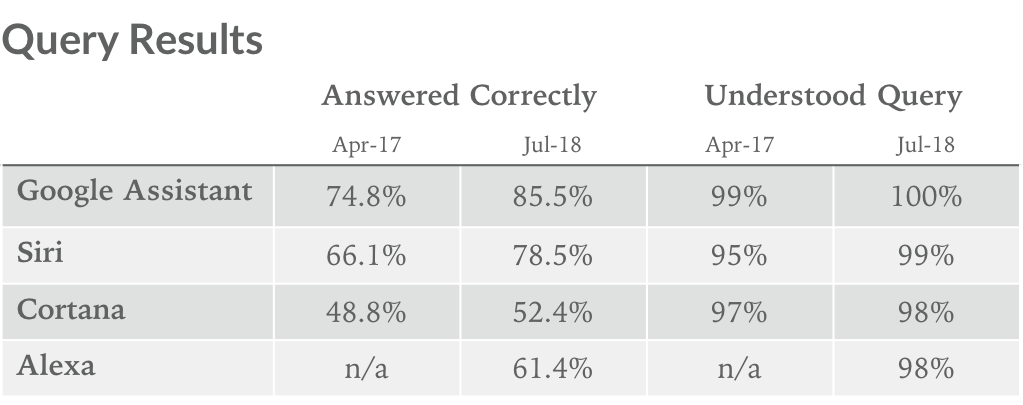

We asked Siri, Google Assistant, Alexa, and Cortana 800 questions each. This time, Google Assistant was able to answer 86% of them correctly vs. Siri at 79%, Alexa at 61%, and Cortana at 52%.

As part of our ongoing effort to better understand the practical use cases of AI and the emergence of voice as a computing input, we regularly test the most common digital assistants and smart speakers. This time, we focused solely on smartphone-based digital assistants (Siri, Google Assistant, Alexa, and Cortana). We added Alexa (on the iOS app) to the mix this round, which means that, going forward, we will test the four major voice assistants on both smartphones and smart speakers.

See past comparisons of smart speakers here and digital assistants here. While the underlying tech is similar, the use cases differ greatly between digital assistants and smart speakers (more on this next week). Therefore, it’s simply not worthwhile to compare, say, Siri on your iPhone and Alexa on an Echo Dot in your kitchen.

Methodology

We asked each digital assistant the same 800 questions and graded each response on two metrics: (1) Did it understand what was being asked? (2) Did it deliver a correct response? The question set, designed to comprehensively test a digital assistant’s ability and utility, is broken into five categories:

- Local – Where is the nearest coffee shop?

- Commerce – Can you order me more paper towels?

- Navigation – How do I get to uptown on the bus?

- Information – Who do the Twins play tonight?

- Command – Remind me to call Steve at 2pm today.
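As a rough illustration of how the two grading metrics roll up into per-category scores, here is a minimal Python sketch. The `GradedQuestion` record, the `summarize` helper, and the toy sample are hypothetical and only illustrate the bookkeeping; they are not our actual grading tooling or data.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class GradedQuestion:
    """One graded response (hypothetical record layout)."""
    category: str     # e.g. "Local", "Commerce", "Navigation", "Information", "Command"
    understood: bool  # metric 1: did the assistant understand the question?
    correct: bool     # metric 2: did it deliver a correct response?


def summarize(results):
    """Return the percent understood and percent correct for each category."""
    totals = defaultdict(lambda: {"asked": 0, "understood": 0, "correct": 0})
    for r in results:
        t = totals[r.category]
        t["asked"] += 1
        t["understood"] += int(r.understood)
        t["correct"] += int(r.correct)
    return {
        category: {
            "asked": t["asked"],
            "understood_pct": 100 * t["understood"] / t["asked"],
            "correct_pct": 100 * t["correct"] / t["asked"],
        }
        for category, t in totals.items()
    }


# Toy data for illustration only, not real test results.
sample = [
    GradedQuestion("Local", understood=True, correct=True),
    GradedQuestion("Local", understood=True, correct=False),
    GradedQuestion("Command", understood=False, correct=False),
    GradedQuestion("Command", understood=True, correct=True),
]
summary = summarize(sample)
for category, stats in summary.items():
    print(category, stats)
```

Tracking the two metrics separately makes it possible to tell a recognition failure (the assistant did not understand the question) apart from a capability gap (it understood but could not answer).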

It is important to note that we modified our question set before conducting this round of tests in order to reflect the changing abilities of AI assistants. As voice computing becomes more versatile and assistants become more capable, we must alter our test so that it remains exhaustive.

These changes to our question set caused sequential drops in the percentage of correct answers in the Navigation category, but the total number of correct answers was still up sequentially across the board. We are confident, however, that our new question set better reflects the AI’s ability and is well-positioned for future tests.

- Testing was conducted using Siri on iOS 11.4, Google Assistant on Pixel XL, Alexa via the iOS app, and Cortana via the iOS app.

- Smart home devices tested include Wemo mini plug, TP-Link Kasa plug, Philips Hue lights, and Wemo Dimmer Switch.

Results & Analysis

Google Assistant continued its outperformance, answering 86% correctly and understanding all 800 questions. Siri was close behind, correctly answering 79% and only misunderstanding 11 questions. Alexa correctly answered 61% and misunderstood 13. Cortana was the laggard, correctly answering just 52% and misunderstanding 19.
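To put the misunderstood counts on the same scale as the correct-answer percentages, the short Python sketch below converts them into understanding rates out of 800 questions. The figures are the ones reported above; the variable and function names are illustrative only.

```python
TOTAL_QUESTIONS = 800

# Misunderstood-question counts and correct-answer percentages reported above.
misunderstood = {"Google Assistant": 0, "Siri": 11, "Alexa": 13, "Cortana": 19}
correct_pct = {"Google Assistant": 86, "Siri": 79, "Alexa": 61, "Cortana": 52}


def understood_pct(missed, total=TOTAL_QUESTIONS):
    """Percentage of questions the assistant understood."""
    return 100 * (total - missed) / total


for name, missed in misunderstood.items():
    print(
        f"{name}: understood {understood_pct(missed):.1f}%, "
        f"answered correctly {correct_pct[name]}%"
    )
```

Every assistant understood well over 97% of the questions, so the spread in correct answers reflects what each assistant can do with a question rather than whether it heard the question properly.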

Note that nearly every misunderstood question involved a proper noun, often the name of a local town or restaurant. Both the voice recognition and natural language processing of digital assistants across the board have improved to the point where, within reason, they will understand everything you say to them.

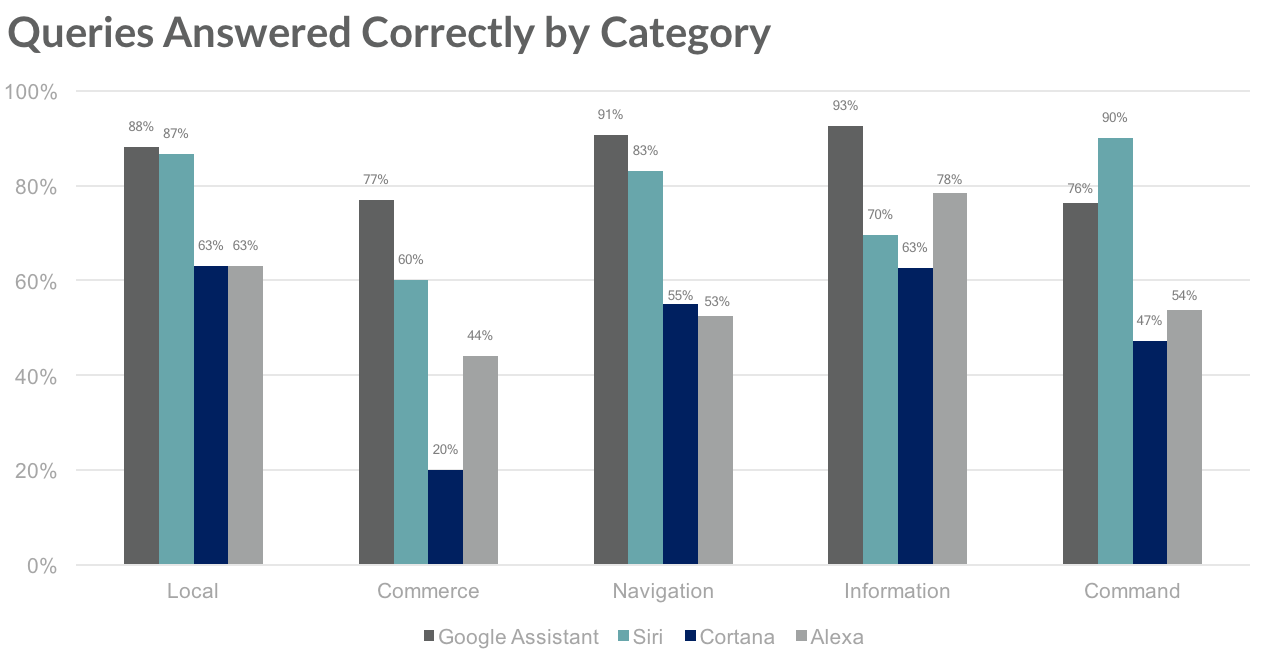

Google Assistant has the edge in every category except Command. Siri’s lead over the Assistant in this category is odd, given that both are baked into the OS of the phone rather than living in a third-party app (as Cortana and Alexa do). We found Siri to be slightly more helpful and versatile (responding to more flexible language) in controlling your phone, smart home, music, etc. Our question set also includes a fair number of music-related queries (the most common action for smart speakers). Apple, true to its roots, has ensured that Siri is capable with music on both mobile devices and smart speakers.

One of the largest discrepancies between the assistants was in the Information category, where Google achieved the highest percentage of correct answers we have seen in our testing (93%). This should come as no surprise given the company’s core competencies, but the depth of answers and true usefulness impressed us.

Google Assistant’s outperformance stems largely from the search function “featured snippets.” This is the box at the top of the search results in your browser with bold text, a link to learn more, and, oftentimes, exactly what you’re looking for. Where others may answer with, “here’s what came back from a search” and a list of links, Google is able to read you the answer. We confirm that each result is correct, but this offers a huge advantage in simple information queries (one of the most common voice computing activities).

We expected Alexa to win the Commerce category. However, many of our commerce questions involve researching products, not conducting purchases with your voice. We think our questions reflect how AI assistants are used today.

When Alexa detects a query related to a product, it will almost always present “Amazon’s Choice” for whatever it may be. For example, when asked, “where can I buy a new set of golf clubs?” Alexa responded, “Amazon’s choice for golf clubs is Callaway Women’s Strata complete set, right hand, 12-piece.” This is unhelpful because the question remains unanswered and a purchase of this type will likely require more research.

Siri and Google Assistant, with their deeply integrated maps capabilities, scored meaningfully higher in the Navigation and Local categories. Map data, especially information on local businesses, is a potential competitive advantage for these assistants. For example, every voice assistant can reliably play a given song or tell you the weather, but the differentiator will be real utility that comes from contextual awareness. If you ask, “what’s on my calendar?” a truly useful answer may be, “your next meeting is in 20 minutes at Starbucks on 12th street. It will take 8 minutes to drive, or 15 minutes if you take the bus. I’ll pull up directions on your phone.”

The iOS app versions of Cortana and Alexa are not entirely reflective of the platforms’ abilities. For example, the Alexa app’s inability to set reminders and alarms or send emails and texts significantly impacted its performance in the Command category. These assistants require you to open an app each time you want to use them, unlike Siri or Google Assistant, which are baked into the device. The Cortana and Alexa apps are fighting an uphill battle on mobile devices.

Improvement Over Time

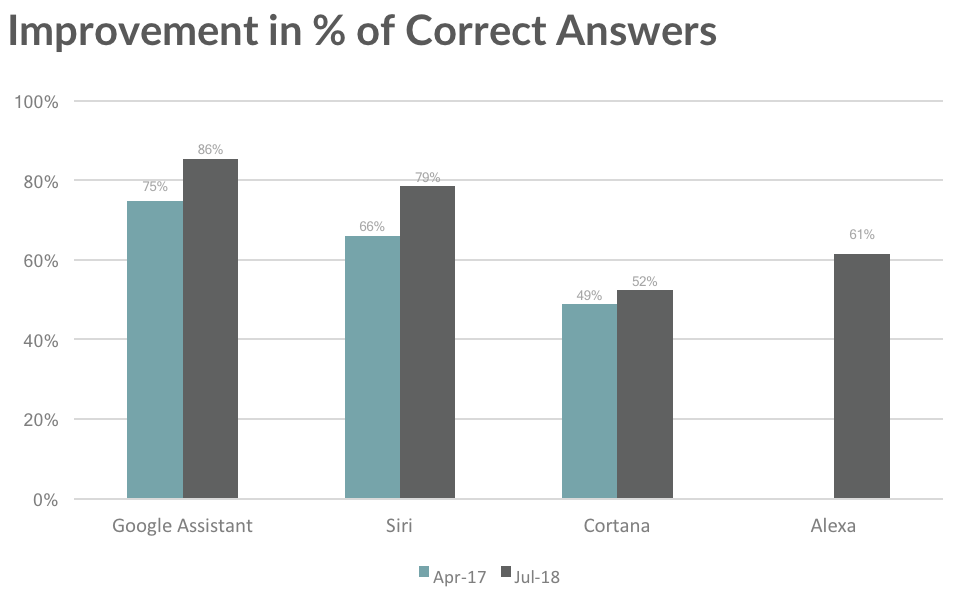

Over a 15-month period, Google Assistant improved by 11 percentage points, Siri 13 points, and Cortana only 3 points in terms of questions answered correctly. We do not have historical data on the Alexa app. We’re impressed with the speed at which the technology is advancing. Many of the issues we had just last year have been erased by improvements to natural language processing and inter-device connectivity.

With scores nearing 80-90%, this raises the question: will these assistants eventually be able to answer everything you ask? The answer is probably not, but continued improvement will come from allowing more and more functions to be controlled by your voice. This often means more inter-device connectivity (e.g., controlling your TV or smart home devices) along with more versatile control of functions like email, messaging, or calendars.

Emerging features continue to push the utility envelope

Voice computing is about removing friction. One new feature that accomplishes that is continued conversation, which allows you to ask multiple questions without repeating the wake word each time. Routines and smart home “scenes” remove friction by performing multiple actions with one command. For instance, you can say “good morning,” and your assistant will turn on the lights, read you the weather, and play your favorite playlist. Siri Shortcuts, coming with iOS 12 in the fall, will allow you to create mini automations (or more versatile routines) that can be triggered with your voice. We are eager to test that feature when it arrives.

Payments and ride-hailing are two use cases where voice is particularly applicable, given they require little to no visual output to carry out. This time, we were only able to successfully hail a ride with Siri and Alexa, and only able to send money with Siri and Google Assistant. Going forward, we expect both of these features to become standard capabilities.

This fall, we will test smart speakers, including Amazon Echo, Google Home, Cortana, and HomePod. The distinction between digital assistants on your phone and smart speakers in your home will be made clearer in a note next week detailing our thoughts on the state of voice computing and its practical use going forward.

Disclaimer: We actively write about the themes in which we invest: virtual reality, augmented reality, artificial intelligence, and robotics. From time to time, we will write about companies that are in our portfolio. Content on this site including opinions on specific themes in technology, market estimates, and estimates and commentary regarding publicly traded or private companies is not intended for use in making investment decisions. We hold no obligation to update any of our projections. We express no warranties about any estimates or opinions we make.